About

Overview

ViennaProfiler consists of three parts:

- ViennaProfiler-API

The API is implemented in C++ and is used for benchmarking at source-code level. The API includes a database connection for MySQL. The profiler class has a generic interface, so connections to other databases can be integrated easily.

- ViennaProfiler standalone executable

The standalone executable is used for benchmarking at application level (similar to `time` on Unix-based systems).

- ViennaProfiler Web Interface

The Web Interface is written in PHP and JavaScript and is based on a MySQL database and an Apache web server. The web application runs in all common browsers, such as Mozilla Firefox, Google Chrome, Opera, Konqueror, Apple Safari and Microsoft Internet Explorer. Internet Explorer may show a few glitches in the layout of the Web Interface, but full functionality is available.
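To make the idea of benchmarking at source-code level more concrete, the following is a minimal sketch that uses only the C++ standard library. It is not the ViennaProfiler API, whose class and method names are not shown in this section; the helper `time_seconds` is a hypothetical name introduced purely for illustration.

```cpp
#include <chrono>
#include <utility>

// Hypothetical helper, NOT part of ViennaProfiler: runs a callable once
// and returns the elapsed wall-clock time in seconds.
template <typename Callable>
double time_seconds(Callable&& work) {
    // steady_clock is monotonic, so the difference is never negative.
    const auto start = std::chrono::steady_clock::now();
    std::forward<Callable>(work)();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(stop - start).count();
}
```

A caller would wrap the code section of interest in a lambda and pass it to `time_seconds`. A profiling API such as the one described above would additionally attach metadata to the measurement and push the result to a database or file.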

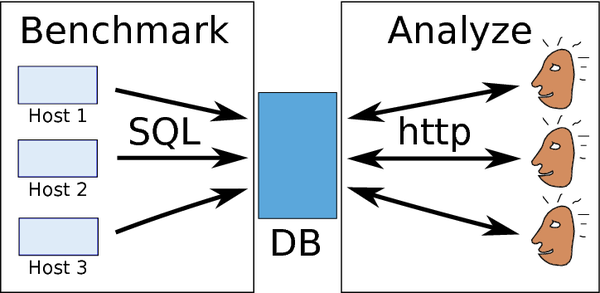

Here an overview of the ViennaProfiler application is given:

|

The left box named "Benchmark" illustrates the use of the benchmarking API or the standalone executable. The arrows indicate that test results are pushed from the host to the database, where the tests are stored. If no database connection is available on the host, tests can instead be stored in a file, which can then be imported into the database via the web interface. This allows ViennaProfiler to be used on hosts without a network connection. The box on the right-hand side named "Analyze" sketches the ViennaProfiler Web Interface. A user can conveniently browse and analyze the tests stored in the database, and also perform maintenance tasks.

Use Case

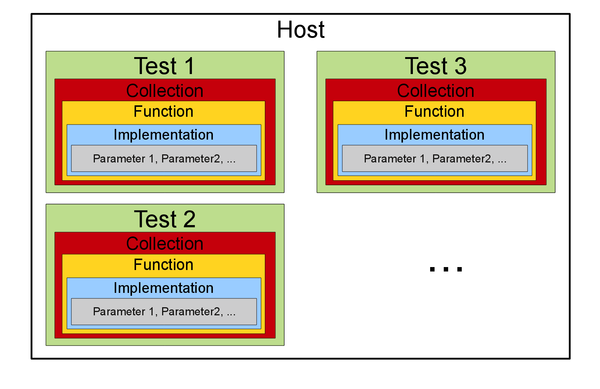

This section discusses a common use case for ViennaProfiler. First, the specific nomenclature of ViennaProfiler is briefly explained. ViennaProfiler has two major entities:

- hosts: computers on which one or more tests are carried out

- tests: each test contains the result of a benchmark run

Each test is made for a certain implementation of a particular function (or functionality), which in turn is associated with a collection. A collection can be, for example, a program.

Suppose we have two collections that should be benchmarked:

Collection 1:

- collection: Linear algebra library

- function: Calculate the determinant of a matrix

- implementations:

  - Cholesky Decomposition

  - LU Decomposition

Collection 2:

- collection: Drawing library

- function: Gauss Smoothing

- implementations:

  - provided by somebody

  - my own

Here is an example of some test runs on a certain host. Note that this table is simplified: it omits the benchmark results and other useful information stored in the real database table.

| Test | Collection | Function | Implementation | Parameters |
|---|---|---|---|---|
| Test1 | Linear Algebra Library | Calculate the determinant of a matrix | Cholesky Decomposition | [matrix_dimension\|15] |
| Test2 | Linear Algebra Library | Calculate the determinant of a matrix | LU Decomposition | [matrix_dimension\|15] |
| Test3 | Drawing Library | Gauss Smoothing | provided by somebody | |
| Test4 | Drawing Library | Gauss Smoothing | my own | |
| ... | | | | |
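The relationship between tests, collections, functions, implementations and parameters can be sketched as a small data structure. This is only an illustration under stated assumptions: `TestRecord`, `example_tests` and `implementations_of` are hypothetical names, and the fields mirror the columns of the simplified table above rather than the real database schema.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical record type; one instance corresponds to one table row.
struct TestRecord {
    std::string name;
    std::string collection;
    std::string function;
    std::string implementation;
    // Parameter name -> value, e.g. {"matrix_dimension", "15"}.
    std::map<std::string, std::string> parameters;
};

// Returns the four example rows from the simplified table.
inline std::vector<TestRecord> example_tests() {
    return {
        {"Test1", "Linear Algebra Library",
         "Calculate the determinant of a matrix",
         "Cholesky Decomposition", {{"matrix_dimension", "15"}}},
        {"Test2", "Linear Algebra Library",
         "Calculate the determinant of a matrix",
         "LU Decomposition", {{"matrix_dimension", "15"}}},
        {"Test3", "Drawing Library", "Gauss Smoothing",
         "provided by somebody", {}},
        {"Test4", "Drawing Library", "Gauss Smoothing", "my own", {}},
    };
}

// Lists the implementations tested for one function of one collection,
// in the order in which the tests appear.
inline std::vector<std::string> implementations_of(
        const std::vector<TestRecord>& tests,
        const std::string& collection,
        const std::string& function) {
    std::vector<std::string> result;
    for (const TestRecord& test : tests) {
        if (test.collection == collection && test.function == function) {
            result.push_back(test.implementation);
        }
    }
    return result;
}
```

Calling `implementations_of` with the linear algebra collection and the determinant function selects the two implementations that would be compared against each other, which is the kind of grouping the Web Interface is described as supporting when browsing tests.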